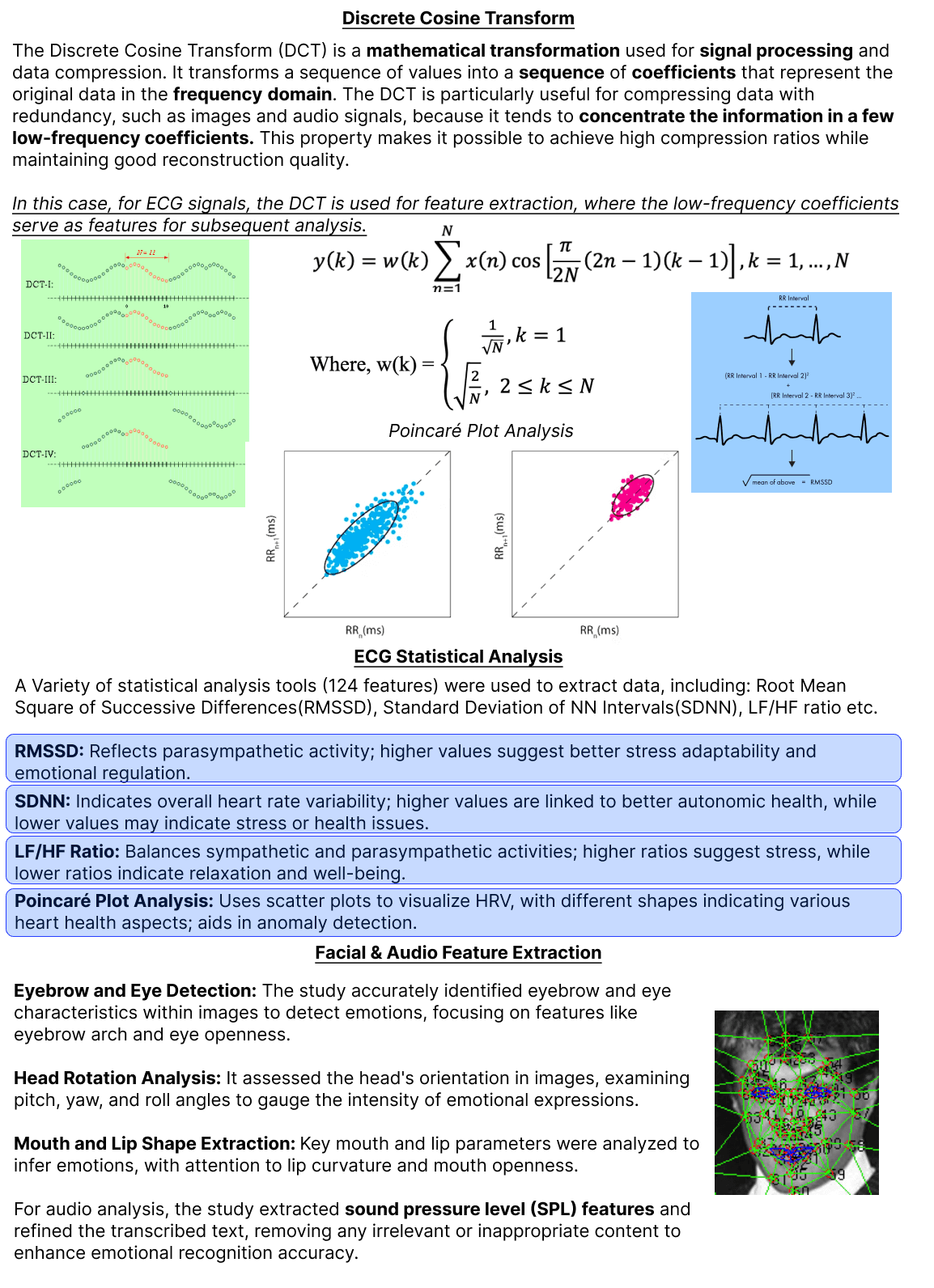

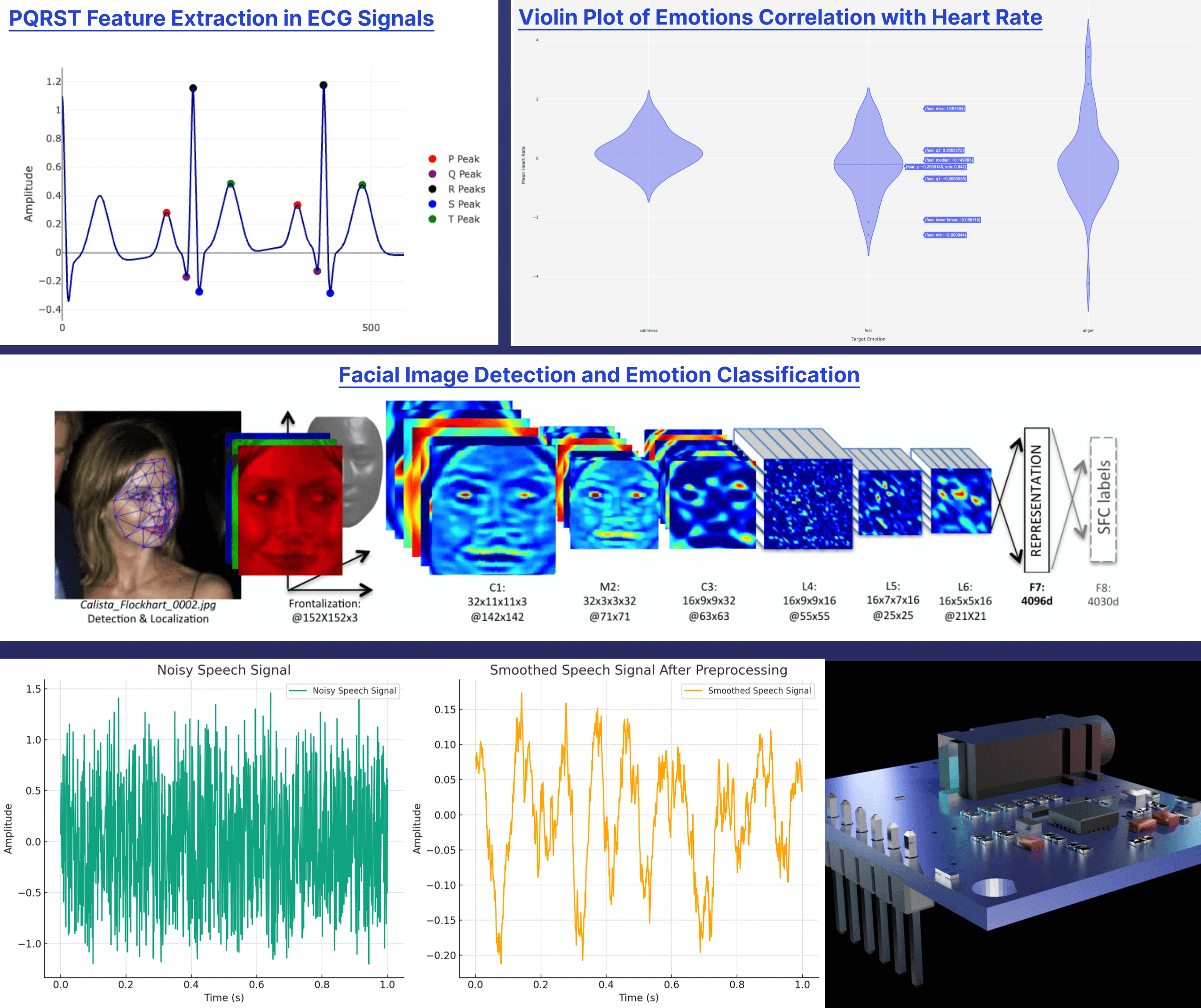

This research uses machine learning to classify three emotional states (happiness, tension, which covers anger and sadness, and calmness) from ECG signals and facial features. By combining data from the DREAMER and DERCFF datasets and using the Discrete Cosine Transform (DCT) for feature extraction, the study achieves 93.8% accuracy. CNNs, LightGBM, and ensemble models are central to reaching this result. The work contributes to affective computing by improving emotion detection through multimodal data analysis.

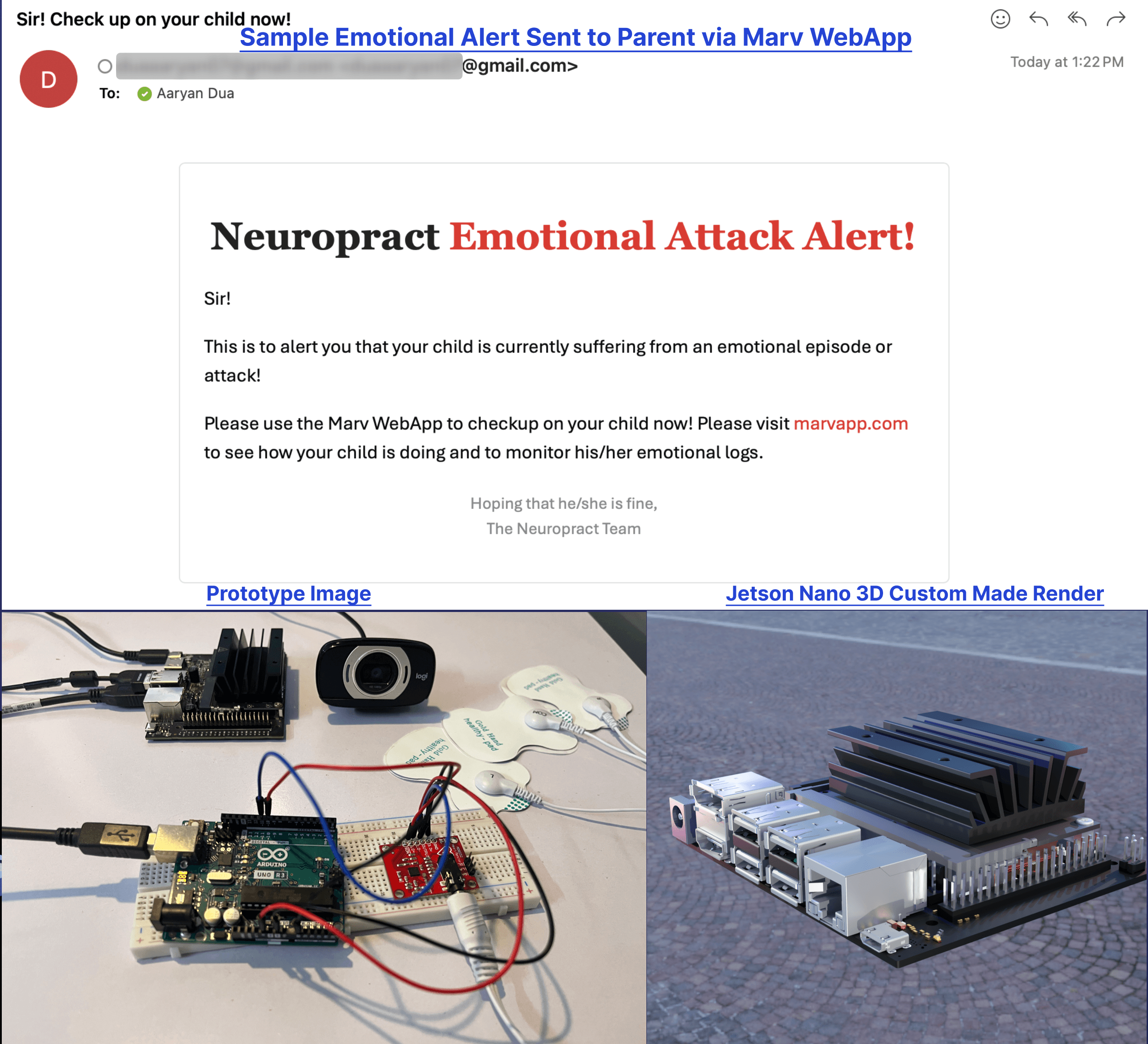

The study combined the DREAMER and DERCFF datasets to train machine learning models. Features were extracted with the DCT, and CNNs were applied to facial images. Classifiers such as LightGBM, SVM, and Naive Bayes were then used to build an accurate, real-time emotion detection system.
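To make the DCT feature-extraction step concrete, the following minimal sketch computes an unnormalised DCT-II over one signal window and keeps the leading coefficients as a compact feature vector. The window length, the number of retained coefficients, the function names `dct2` and `dct_features`, and the synthetic sine wave standing in for an ECG segment are all assumptions for illustration; the study's actual preprocessing and parameters are not described here.

```python
import math


def dct2(signal):
    """Unnormalised DCT-II: X[k] = sum_n x[n] * cos(pi / N * (n + 0.5) * k)."""
    n = len(signal)
    return [
        sum(x * math.cos(math.pi / n * (i + 0.5) * k) for i, x in enumerate(signal))
        for k in range(n)
    ]


def dct_features(window, num_coeffs=8):
    """Keep the first num_coeffs coefficients (hypothetical choice of 8)."""
    return dct2(window)[:num_coeffs]


# Synthetic stand-in for one ECG window of 64 samples; not real data.
window = [math.sin(2 * math.pi * 3 * t / 64) for t in range(64)]
features = dct_features(window)
print(len(features))
```

The DCT concentrates most of a smooth signal's energy in its low-order coefficients, which is why truncating the transform gives a short feature vector. In practice a library routine such as `scipy.fft.dct` would replace the hand-written loop.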

Accurately detecting emotions from human expressions and physiological signals is challenging because emotional responses vary from person to person. Previous models lacked precision and did not integrate multiple data sources, such as facial and ECG signals, which limited their effectiveness in real-world applications.

The research introduced a novel approach that integrates ECG and facial feature data, using DCT-based feature extraction and classifiers such as CNNs, LightGBM, and ensemble methods. This multimodal analysis improved the emotion detection system's accuracy, which reached 93.8%, and provides a robust, real-time solution for detecting emotions across diverse individuals.
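One common way to combine an ECG model with a facial model is late fusion by soft voting, where each model's class probabilities are averaged before picking a label. The sketch below illustrates that idea only. Whether the study fuses modalities this way is an assumption, and the function name `soft_vote`, the weights, and the probability values are hypothetical.

```python
LABELS = ("happiness", "tension", "calmness")


def soft_vote(prob_vectors, weights=None):
    """Weighted average of per-model class probabilities, then argmax."""
    if weights is None:
        weights = [1.0] * len(prob_vectors)
    total = sum(weights)
    fused = [
        sum(w * p[i] for w, p in zip(weights, prob_vectors)) / total
        for i in range(len(LABELS))
    ]
    best = max(range(len(LABELS)), key=fused.__getitem__)
    return LABELS[best], fused


# Hypothetical outputs of an ECG classifier and a facial-image classifier.
ecg_probs = [0.2, 0.5, 0.3]
face_probs = [0.7, 0.2, 0.1]

label, fused = soft_vote([ecg_probs, face_probs])
weighted_label, _ = soft_vote([ecg_probs, face_probs], weights=[3.0, 1.0])
print(label, weighted_label)
```

With equal weights the facial model's confident prediction wins. Weighting the ECG model more heavily flips the decision, which shows how the weights control each modality's influence.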